First, we require a robust definition of information. Now, there are two robust formulations of information theory, and both of them need to be considered. The first is that of Claude Shannon. This is the formulation most creationists will cite, largely because apologist screeds have built various claims on it: that information must contain some sort of message, and that a message therefore requires somebody to formulate it. It doesn't robustly apply to DNA, however, because it's the wrong treatment of information. When dealing with complexity in information, you MUST use Kolmogorov's formulation, because that's the one that deals with complexity.

So just what is information? In Shannon theory, information can be defined as 'reduction in uncertainty'. Shannon worked in communications, so his theory deals with fidelity in signal transmission and reception. Given this, there is a maximum information content, which corresponds to the lowest possible uncertainty.
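As a minimal sketch of 'reduction in uncertainty', assuming the receiver's uncertainty can be described by a probability distribution over the possible messages, here it is in Python. The function name `shannon_entropy` and the coin-toss and 90/10 figures are illustrative choices, not anything taken from Shannon:

```python
import math


def shannon_entropy(probabilities):
    """Shannon entropy in bits: the sum of p * log2(1/p), skipping zero-probability outcomes."""
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)


# Before a fair coin toss is reported, the receiver is uncertain by 1 bit.
before = shannon_entropy([0.5, 0.5])

# A clean signal leaves no doubt about the outcome...
after = shannon_entropy([1.0])
information_gained = before - after

# ...whereas a noisy one leaves some (an illustrative 90/10 split), so less uncertainty is removed.
noisy_after = shannon_entropy([0.9, 0.1])
noisy_gain = before - noisy_after  # about 0.53 bits

print(before, after, information_gained)  # 1.0 0.0 1.0
```

The clean reception removes the full bit of uncertainty; the noisy one removes only about half of it, which is the Shannon sense in which noise costs information.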

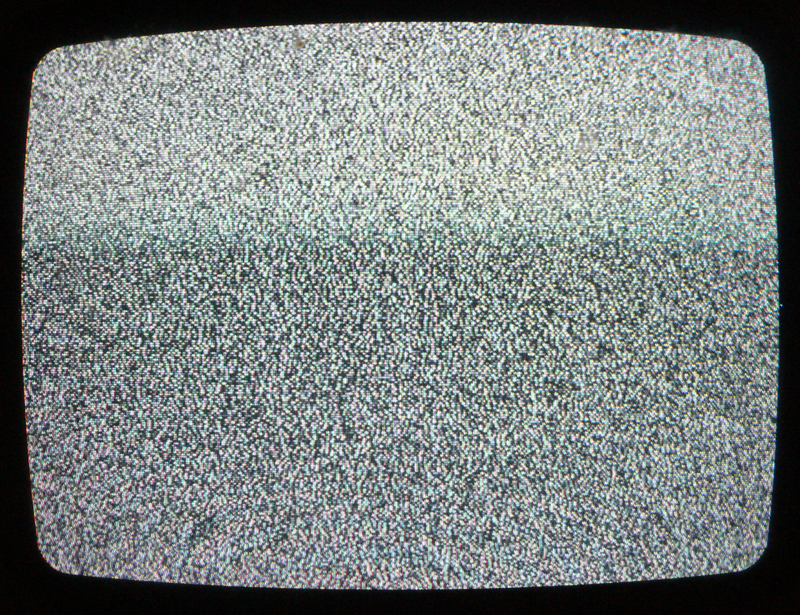

Now, suppose we have a signal, say from a TV station. If your TV is perfectly tuned, and no noise is added between transmission and reception of the TV signal, then you receive the channel cleanly and the information content is maximal. If, however, the TV is tuned slightly off the channel, or your reception is in some other respect less than brilliant, you get noise in the channel. Older readers will remember pre-digital television, in which this was manifest as 'bees' in the picture and crackling in the audio. Nowadays, you tend to get breaks in the audio and pixelated blocks in the picture. They amount to the same thing, namely noise, or 'an increase in uncertainty'. This tells us that any deviation from the maximal information content, which is a fixed quantity, constitutes a degradation of the information received, or 'Shannon entropy'.

Shannon actually chose this term because the equation describing his 'information entropy' is almost identical to the Gibbs/Boltzmann equation for statistical entropy, as used in statistical mechanics. See my earlier post on entropy for more on this.
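For reference, here are the two equations side by side. Here $p_i$ is the probability of the $i$-th possible message (Shannon) or microstate (Gibbs), and $k_B$ is Boltzmann's constant; apart from that constant and the base of the logarithm, they have the same form. The last line is a standard result that puts a number on the TV example: a binary channel that flips each transmitted bit with probability $p$ can carry at most $C$ bits per symbol, so any noise ($0 < p < 1$) lowers what gets through.

$$H = -\sum_i p_i \log_2 p_i \qquad \text{(Shannon, in bits)}$$

$$S = -k_B \sum_i p_i \ln p_i \qquad \text{(Gibbs)}$$

$$C = 1 - H_2(p), \qquad H_2(p) = -p \log_2 p - (1 - p) \log_2 (1 - p)$$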

This seems to gel well with the creationist claims, and is the source of all their nonsense about 'no new information in DNA'. Of course, there are several major failings in this treatment.

The first comes from the theory's founders themselves, in The Mathematical Theory of Communication, the book Shannon wrote with Warren Weaver on the topic:

> The semantic aspects of communication are irrelevant to the engineering problem.

And:

> The word information, in this theory, is used in a special sense that must not be confused with its ordinary usage. In particular, information must not be confused with meaning. In fact, two messages, one of which is heavily loaded with meaning and the other of which is pure nonsense, can be exactly equivalent, from the present viewpoint, as regards information.

So we see that the theory's own authors don't actually agree with the treatment of information relied on so heavily by creationists.

The second failing is that Shannon's is not the only rigorous formulation of information theory. The other comes from Andrey Kolmogorov, whose theory deals with information storage. In Kolmogorov theory, information content is a measure of complexity or, better still, of how strongly something resists compression: the less a string can be compressed, the more information it contains. This can be formulated in terms of the length of the shortest algorithm that can reproduce the information.
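Stated formally (this is the standard textbook definition, not notation used elsewhere in this post): fix a universal Turing machine $U$; the Kolmogorov complexity of a string $x$ is the length, in bits, of the shortest program $p$ that makes $U$ output $x$. A string is called $c$-incompressible when no program is more than $c$ bits shorter than the string itself. $K_U$ is not computable in general, so in practice real compressors are used to give upper bounds on it.

$$K_U(x) = \min\{\, |p| : U(p) = x \,\}$$

$$x \text{ is } c\text{-incompressible} \iff K_U(x) \ge |x| - c$$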

Returning to our TV channel, we see a certain incongruence between the two formulations, because in Kolmogorov theory, the noise that you encounter when the TV is slightly off-station actually represents an increase in information, whereas in Shannon theory, it represents a decrease! How can this be? The answer is quite easily summed up, and it highlights the distinction between the two theories, both of which are perfectly robust and valid.

Let's take an example of a message, say a string of 100 1s. In its basic form, that would look like this:

1111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111111

Now, there are many ways we could compress this. The first has already been given above, namely 'a string of 100 1s'.

Now, if we make a change to that string:

1111111110111111111011111111101111111110111111111011111111101111111110111111111011111111101111111110

We now have a string of nine 1s followed by a zero, repeated 10 times. We now clearly have an increase in information content, even though the number of digits is exactly the same, because the shortest description of the string has become longer. However, there is a periodicity to it, so a simple compression algorithm can still be applied.
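A toy sketch of that 'simple compression algorithm', in Python: find the shortest unit that, repeated, rebuilds the whole string. The function `smallest_period` is a hypothetical helper written for illustration, not a library call, and it only exploits exact repetition:

```python
def smallest_period(s):
    """Return (unit, count) with unit * count == s and unit as short as possible."""
    n = len(s)
    for p in range(1, n + 1):
        # Only unit lengths that divide the total length can tile the string exactly.
        if n % p == 0 and s[:p] * (n // p) == s:
            return s[:p], n // p
    return s, 1  # only reached for the empty string


ALL_ONES = "1" * 100
PERIODIC = "1111111110" * 10

print(smallest_period(ALL_ONES))  # ('1', 100)
print(smallest_period(PERIODIC))  # ('1111111110', 10)
```

Both strings compress to a unit plus a count, but the second needs a ten-character unit rather than a one-character one, so its shortest description is longer. A string with no repetition at all comes back unchanged with a count of 1.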

Let's try a different one:

1110011110001111110111110001111111111100110011001111000111111111110111110000111111000111111110011101

Now, clearly, we have something that approaches an entirely random pattern. The more random a pattern is, the longer the algorithm required to describe it, and the higher the information content.
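Because Kolmogorov complexity can't be computed exactly, a common practical stand-in is the output size of a real compressor, which gives an upper bound. This Python sketch swaps in the standard library's `zlib` for that purpose; the variable name `IRREGULAR` is mine. For strings this short, zlib's fixed header and checksum dominate the byte counts, so only the ordering is meaningful:

```python
import zlib


def compressed_size(s):
    """Bytes zlib needs at maximum compression: a computable upper-bound proxy
    for Kolmogorov complexity, which cannot itself be computed."""
    return len(zlib.compress(s.encode("ascii"), 9))


ALL_ONES = "1" * 100
PERIODIC = "1111111110" * 10
IRREGULAR = ("1110011110001111110111110001111111111100110011001111000111"
             "111111110111110000111111000111111110011101")

for name, s in [("all ones", ALL_ONES), ("periodic", PERIODIC), ("irregular", IRREGULAR)]:
    print(name, len(s), compressed_size(s))
```

The all-ones string needs the fewest bytes, the periodic one slightly more, and the irregular one the most, matching the ranking described above.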

Returning once again to our TV station, the further you get from the station, the more random the pattern becomes, and the longer the algorithm required to reproduce it, until you reach a point at which the shortest representation of the signal is the thing itself. In other words, no compression can be applied.
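To mimic drifting off-station, this Python sketch takes a perfectly periodic signal, flips each symbol with some probability, and again uses zlib's compressed size as a computable stand-in for Kolmogorov complexity. The flip model, the helper names and the seed are assumptions for illustration, not a model of real TV reception. The compressed size should climb as the flip probability rises towards 0.5, where each symbol is a pure coin toss and zlib can do little better than one bit per symbol:

```python
import random
import zlib


def compressed_size(s):
    # zlib output length as a rough, computable stand-in for Kolmogorov complexity
    return len(zlib.compress(s.encode("ascii"), 9))


def add_noise(signal, flip_probability, rng):
    """Flip each '0'/'1' character independently with the given probability."""
    return "".join(
        ("0" if c == "1" else "1") if rng.random() < flip_probability else c
        for c in signal
    )


clean = "1111111110" * 1000  # a perfectly periodic 'on-station' signal
rng = random.Random(42)      # fixed seed so the run is repeatable

for p in [0.0, 0.01, 0.1, 0.5]:
    noisy = add_noise(clean, p, rng)
    print(f"flip probability {p:>4}: {compressed_size(noisy)} bytes")
```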

This is, roughly, how compression works when you compress images for storage on your computer. The bitmap is the uncompressed file, while a compressed format stores it, roughly, as '100 pixels of x shade of blue followed by 300 pixels of black', and so on. (That description is run-length encoding, the simplest such scheme; JPEG is considerably more sophisticated and also discards some detail, but it too exploits pattern and repetition.) Thus, the less periodicity and pattern an image has, the less it can be compressed and the larger the output file will be.
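The '100 pixels of blue followed by 300 pixels of black' description is run-length encoding. Here is a minimal Python sketch, with hypothetical helper names and colour names standing in for pixel values. Real JPEG works differently (a discrete cosine transform, quantisation and Huffman coding), so treat this as an illustration of the principle rather than of JPEG:

```python
from itertools import groupby


def rle_encode(pixels):
    """Run-length encode a sequence into (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(pixels)]


def rle_decode(runs):
    """Rebuild the original sequence from (value, run_length) pairs."""
    return [value for value, count in runs for _ in range(count)]


# A hypothetical scan line: 100 blue pixels followed by 300 black ones.
scan_line = ["blue"] * 100 + ["black"] * 300
runs = rle_encode(scan_line)
print(runs)                            # [('blue', 100), ('black', 300)]
print(rle_decode(runs) == scan_line)   # True: lossless, nothing thrown away

# A busy line with no runs at all doesn't shrink: one run per pixel.
busy_line = ["blue", "black"] * 200
print(len(rle_encode(busy_line)))      # 400
```

The patterned line collapses to two pairs, while the busy line needs as many pairs as it has pixels, which is the 'less pattern, larger file' point in miniature.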

So, which of the following can reasonably be called 'information'?

From sand dunes, we can learn about prevailing wind directions over time and, in many cases, the underlying terrain just from the shape and direction.

Information?

Theropod wrote: The dogshit can tell us what the dog ate, how much it ate, how big the dog's anus is, how long ago the dog shat on your lawn, the digestive health of the dog, and whether there are parasite eggs in the shit, and it contains traces of the dog's DNA that we can sequence to identify the individual dog. Seems like a lot of information to me. It also seems like more than enough information is present to shoot your assertion down.

Information?

DNA is information in the sense that it informs us about the system, not that it contains a message. It is not a code, more something akin to a cipher, in which the chemical bases are treated as the letters of the language. There is nobody trying to tell us anything here, and yet we can be informed by it.

Information?

About 1% of the interference pattern on an off-channel television screen is caused by the cosmic microwave background.

Information?

This is information in the sense that the squiggles represent more data than would be contained on a blank piece of paper, although even a blank piece of paper is information. In this example, information is defined as the number of bits it would take to represent it in a storage system. As it's fairly random, it is less amenable to compression. This is pure Kolmogorov information.

Information?

Of all the information sources in this post, this is the only one that actually contains a message, and is therefore the only one to which Shannon information theory can be applied, as it is the only one that could actually decrease in terms of signal integrity.

Which of the above are information?

Answer: All of them. They are just different kinds of information.

So, when you get asked 'where did the information in DNA come from?' the first thing you should be doing is asking for a definition of 'information'.

What about the genetic code? Scientists talk all the time about the genetic code, and creationists actually quote Richard Dawkins saying that it's digital, like a computer code. How should we address this?

Let's start with the claim by Dawkins. What does it actually mean for something to be digital? In the simplest terms, it means that something exists in a well-defined range of states. In computers, we tend to talk about binary states, in which a 'bit' is either a 1 or a 0. This is the well-defined range of states for binary. Other digital systems are more complex, using a greater number of states, but the same principle applies to all of them. I'll link at the bottom to a post by Calilasseia dealing not only with a range of computer codes, but also with some interesting treatment of what it takes to extract information from them.

Moving on to the 'genetic code': in DNA, we have the nucleobases cytosine, adenine, guanine and thymine (in RNA, thymine is replaced by uracil, U). These are the digital states of DNA, and in our treatment we use only their initial letters: C, A, G and T. Further, they come in pairs, with C always pairing with G, and A always pairing with T (or with U in RNA).
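To make the 'digital states' point concrete: four well-defined states need two bits each, since log2(4) = 2. Here's a small Python sketch. The particular base-to-bits mapping is arbitrary and hypothetical (any one-to-one assignment would do), and the pairing rule is the one just described:

```python
import math

BASES = "ACGT"
# Pairing rule: A with T, C with G.
COMPLEMENT = {"A": "T", "T": "A", "C": "G", "G": "C"}

# Four well-defined states need log2(4) = 2 bits each.
BITS_PER_BASE = math.log2(len(BASES))


def to_bits(strand):
    """Encode each base as 2 bits using an arbitrary mapping: A=00, C=01, G=10, T=11."""
    return "".join(format(BASES.index(b), "02b") for b in strand)


def partner_strand(strand):
    """The bases that pair with the given strand, position by position.

    The two strands run antiparallel, so biologists usually read this reversed
    (the 'reverse complement'); that step is left out here.
    """
    return "".join(COMPLEMENT[b] for b in strand)


strand = "GATTACA"
print(BITS_PER_BASE)           # 2.0
print(to_bits(strand))         # 10001111000100
print(partner_strand(strand))  # CTAATGT
```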

From here, we can build up lots of 'words': when the bases occur in certain ordered sequences (no teleology here), read three at a time as 'codons', they specify particular proteins, which go into building organisms (loosely speaking). The point is that this is all just chemistry, while the code itself is our treatment of it. In other words, the map is not the terrain.
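As an illustration of 'the code is our treatment of it', here is a Python sketch in which a lookup table maps three-base codons to amino acids. The table is a deliberately tiny excerpt of the standard genetic code (the full table has 64 codons), and `translate` is a hypothetical helper. The table is our description of what the chemistry does: the map, not the terrain.

```python
# A small excerpt of the standard genetic code (DNA codons -> amino acids).
# Deliberately partial: the full table has 64 codons.
CODON_TABLE = {
    "ATG": "Met",  # also the usual start codon
    "TTT": "Phe",
    "GGC": "Gly",
    "AAA": "Lys",
    "TGG": "Trp",
    "TAA": "Stop",
    "TAG": "Stop",
    "TGA": "Stop",
}


def translate(coding_sequence):
    """Read three bases at a time until a stop codon.

    Raises KeyError for codons outside this excerpt; trailing partial codons are ignored.
    """
    amino_acids = []
    for i in range(0, len(coding_sequence) - 2, 3):
        residue = CODON_TABLE[coding_sequence[i:i + 3]]
        if residue == "Stop":
            break
        amino_acids.append(residue)
    return amino_acids


print(translate("ATGTTTGGCAAATGGTAA"))  # ['Met', 'Phe', 'Gly', 'Lys', 'Trp']
```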

DNA is a code in precisely the same way that London is a map.

More here by the Blue Flutterby.
